Oops, We Did It Again! - Expert Opinion on LLM Security

By Christiaan Ottow (CTO, Northwave)

Introduction

Large Language Models (LLMs) are here to stay – but aren’t we repeating the same architectural decision that created so many security problems in the past?

Early in our history with computers, we experienced the problems that arise when other people take control over your system and use it for malicious purposes. Viruses, worms and malware have plagued us for decades now because of vulnerabilities inherent to the way we designed computer systems.

But aren’t we doing the same thing again with how we design and build LLMs? In this article we look at this fundamental design problem in our computer systems and explore how it applies to the design of current LLM-based systems.

Mixing Data and instructions?

Computers execute instructions on data. Everything a computer does, from showing pixels on a screen to transmitting video across a WiFi link, comes down to that. For example, when executing “2 + 1” a computer will execute an “addition” instruction on two pieces of data: the values “2” and “1”. It will store this in bytes, where some bytes represent “addition”, some represent “2” and some “1”. Those bytes are stored in memory together, transmitted on networks, and so on. Conventions indicate which bytes are instructions (“addition”) and which are data (“2”, “1”). Simple instructions like adding, subtracting, and moving pieces of data around form the basis of all computing.

However, problems arise when this data can be influenced by attackers in a way that affects the distinction between instructions and data. We typically let outside sources influence pieces of data. Loading a web page shows you a large amount of data on screen that comes from outside sources. All that data was processed by your computer in potentially dangerous ways. We’re careful in letting outsiders influence instructions and we’re a bit more careful when we download executable programs from online sources. However, even in displaying a webpage, data and instructions are mixed–the webpage contains tons of instructions on how to render the webpage data. Mixing data and instructions, however, sometimes makes it hard for the computer to distinguish between the two.

And that’s exactly what so many types of vulnerabilities have in common. They all stem from the architectural choice that we make, time and again, to mix data and instructions. In computer memory, processor instructions are mixed with user data. This causes buffer overflows, allowing someone who can input user data to influence the processor instructions. Consequently, the attacker gains control over the program just by entering data.

Computing then moved to the web, and so did this problem: in SQL statements that applications use to query a database, the SQL commands are mixed with the data you want to store or retrieve, leading to the same confusion–known as SQL injection. XSS has the same issue in the HTML context. Web applications have other vulnerability classes, like authorisation checking and race conditions, but the mixing of data and instructions is especially pernicious. It’s really hard to solve, unless you can clearly make the distinction between data that is “just data” and data that is “instructions to influence control flow” and maintain that boundary.

Now, enter LLMs, the AI models that underly ChatGPT, Copilot, NotebookLM, and all those other cool new AI applications. An LLM is basically a trained model that receives text and reacts with the text that relates most closely with the input text. As such, it doesn’t reason or think. Its model of the universe consists of statistical relations between tokens (parts of words). This gives us great applications and opportunities, because it turns out that if you train a model on a large part of the internet, and you build wrappers around it so it “understands” conversations and has some safeties, and you have a few thousand humans tweak manually, you get something that is really useful in our daily work.

A prompt: data or instruction?

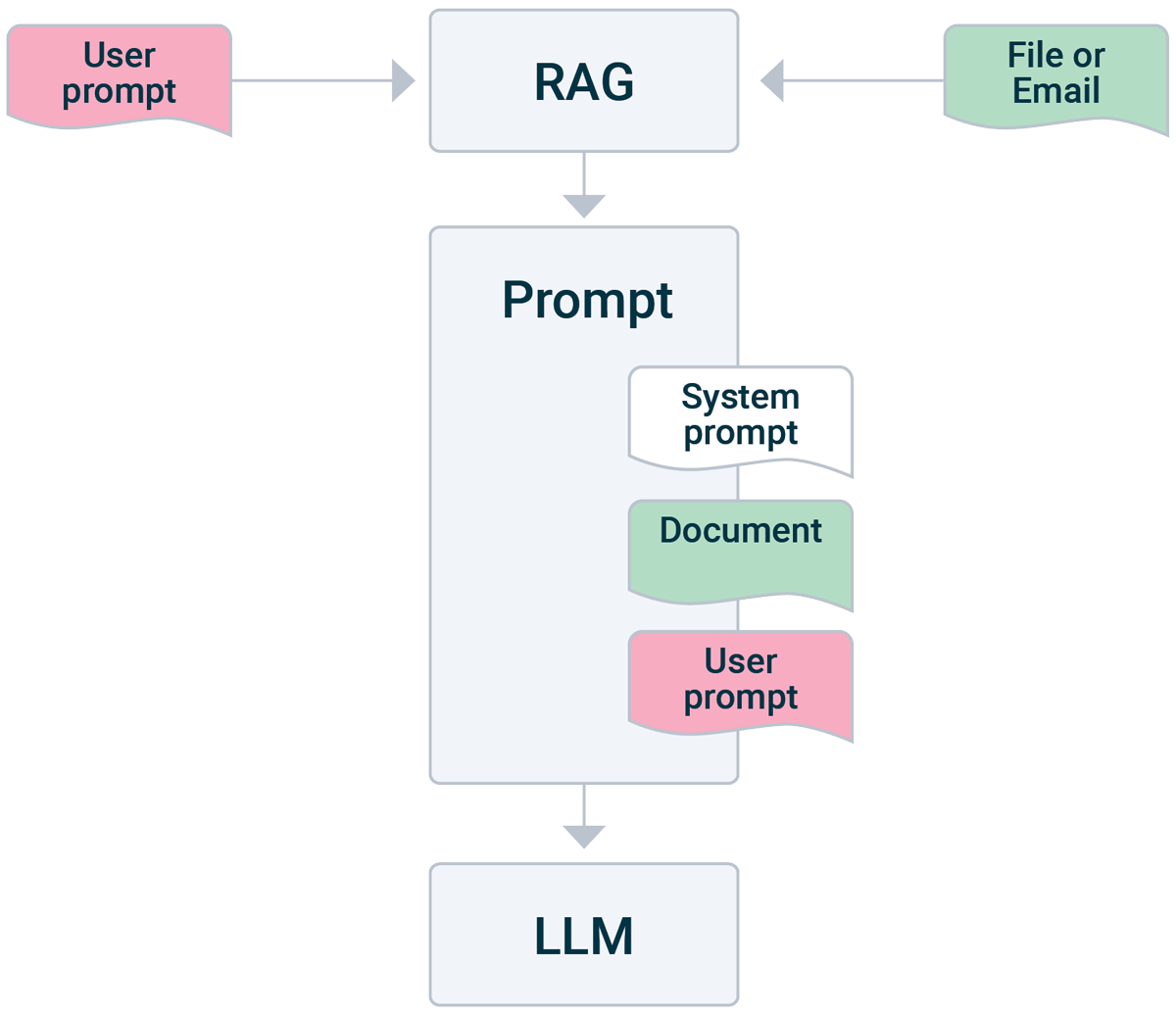

But… let’s look at how the design of LLMs fits our security dilemma of mixing data and control. To get an LLM-based application to say something, you send it a prompt. All the application does is feed the prompt through its LLM, token by token, which generates tokens in response, which are then moderated by the application’s conversation-awareness layer (or it would never stop generating). Different LLMs exist for different purposes, and some applications take a lot of context information into account that they also feed to the LLM (so-called RAG applications). These RAG applications have an LLM core but are able to answer questions more specifically to your context by incorporating other data sources in real-time. For instance, Microsoft 365 Copilot has access to your emails and Sharepoint documents and uses those as context in a very smart way1 to give useful answers to your prompts. Other examples are applications that first browse the web (like ChatGPT) or read some documents you feed it, before consulting the LLM. However, under the hood, the LLM doesn’t know which information is “context” (web pages, files, emails) and which is “your prompt”. The relevant context information is submitted to the LLM together with your prompt and the LLM responds to the whole of context and prompt. Figure 1 shows what this flow looks like.

Figure 1: How a RAG builds a prompt

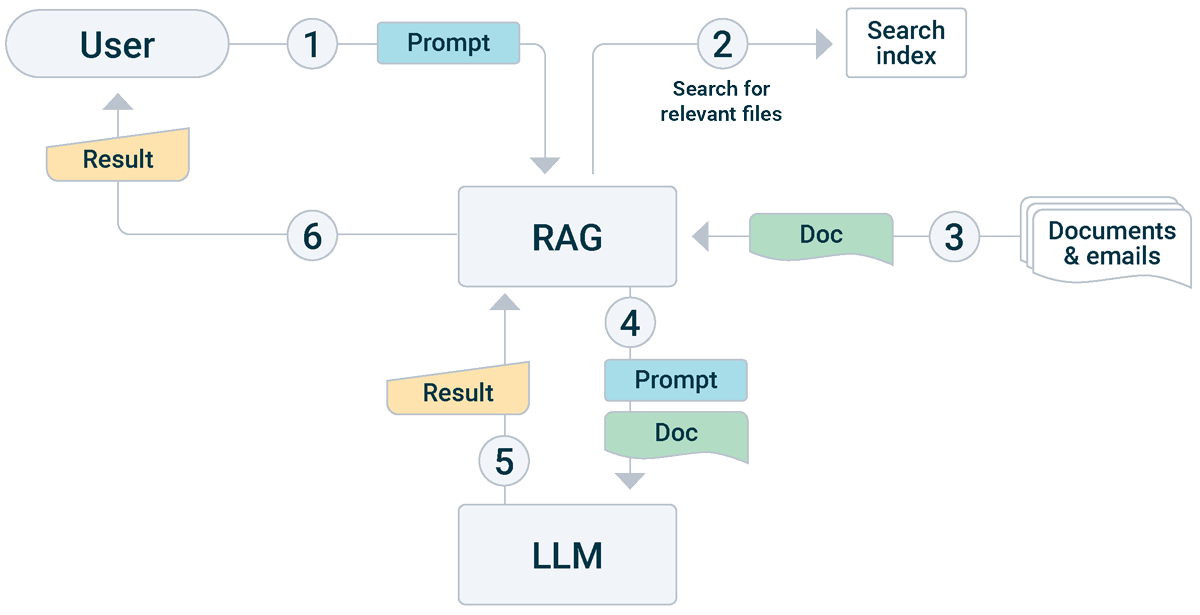

A typical RAG application will first search for relevant context information, like web pages or mails from your mailbox or documents from your folders and retrieve that information. It then builds a huge block of text, consisting of the system prompt and this context information, appends the prompt you gave, and feeds that to the LLM. The LLM starts generating text in response from its model, and you get the response back. Figure 2 shows this flow.

Figure 2: Flow of a RAG LLM conversation

This is where things get tricky. From the previous examples in our history with computers, we’ve seen what happens if data and instructions are mixed. And indeed, this proves to be a problem with LLMs as well. In the past months, some very interesting research has been published on what is now called Prompt Injection Attacks, where an attacker influences the prompt given to an LLM and thus influences what response you get to see or what actions the LLM takes (if it’s capable of those). See IBM’s general description of prompt injection attacks and Zenity’s deep dive into a special type of prompt injection attack that demonstrates its effect.

How can this be abused?

What does this mean in practice? Well, if you use an LLM that reads data that can be influenced by an attacker, then an attacker could influence the prompt. Take Copilot again: since it reads emails, someone could craft a special email that, when used by Copilot in a prompt, influences the response of that prompt. Copilot includes the mail into the prompt if it believes it is relevant to the question you ask it. You don’t even need to open the mail; it just needs to be in your mailbox.

Why is that bad? In the Zenity article above, the attacker demonstrates that instead of giving the right bank account number for a given customer, a different response (with different bank account) can be returned. If you include LLM queries in an automated process, this could become very tricky very fast. It becomes even more dangerous if the LLM that reads the untrusted input can also perform actions, like searching the web. The LLM could be persuaded to send the contents of the prompt (including documents) to a website, exfiltrating data to the attacker. Or, depending on what actions the LLM can take, do other things. A great overview of this type of attack is described here2.

![LLM-Figures-3[57] LLM-Figures-3[57]](https://northwave-cybersecurity.com/hubfs/LLM-Figures-3%5B57%5D.png)

Figure 3: Attacker exfiltrating data by manipulating the prompt indirectly

Architectural weakness

Can we solve this? The design of LLMs makes this even more difficult than we saw previously with computer memory and SQL statements. In computer memory, at least we have a clear distinction initially between data and control. We store these two in different memory regions, and while it’s the same virtual memory space, protections around keeping this distinction have become more effective in recent years. With SQL statements, it’s even easier, if the query is construed taking the difference between data and control into account. With LLMs however, there is no distinction between data and control. By nature, the LLM just responds to text, and your so-called prompt is as much data and control as are the documents and web pages that are read. While we talk about context and prompt, these actually don’t exist in LLM context–we just feed the LLM a large chunk of text (that we see as context and prompt) and the LLM responds. I don’t see a fundamental way to solve this issue in the design.

What can we do?

So, we must resort to extra checks and safeties. We shouldn’t let an LLM have access to confidential data and perform actions in the outside world. For instance, Microsoft doesn’t let Copilot read your corporate data and browse the web, you need to choose before you interact which of the two options you want. That goes a long way towards mitigating this issue but is not watertight and is a severe limitation on the usefulness of LLMs going forward.

In the end, you want an LLM to be able to access the data that is relevant for your question, whether it’s online or in your documents, and perhaps you want the LLM to already prepare an email and place it in your drafts folder. Fast-forward a few years and you want the LLM-based productivity assistant to autonomously perform all sorts of tasks for you.

So, what then? As Zenity CTO Michael Bargury says (see article linked previously), we will end up with an EDR-like approach to combating bad behaviour by LLMs (under the influence of attackers), in a cat-and-mouse game, as long as we don’t have that distinction between data and instructions. Some software will have to look at all the data going into the prompt and decide if it influences the response in a way that is likely contrary to your wish as a user. And the first products doing exactly that have arrived already3.

While it’s the best we have now, it is also a brittle approach, as we see with EDR. Behavioural patterns and detection rules are developed, and attackers find a way around them. This game of cat and mouse has already begun. You can read more at this link for a great first example of the way hackers find their way around limitations of for instance Copilot, and then Microsoft closing those specific gaps.

Conclusion

For now, this means that we should be mindful of how and where we implement LLMs. They are great, make good use of them, but don’t let them go near confidential data and reach out to the internet. If you develop a solution that uses an LLM, please actively consider who can feed data into the prompt, directly or indirectly, and shield your LLM accordingly. Currently, my worry is more with all those products and services out there that are embedding LLM features and RAG systems without paying proper attention to this issue. Engineers of those products should pay close attention to this developing research, and make sure to have proper boundaries in place in their own implementations. If you’re unsure how to do this, you can consult with our offensive security specialists on best practices and security checks on your machine learning system.

If you use an LLM, be careful what you give it access to and don’t take its answers for truth. Especially if the response influences outcomes in the real world that are important to you (making a decision, transferring money), don’t use the LLM as unverified single source.

In the end, we’ll have to learn how to safely use LLMs, and builders of AI-based applications will have to do a lot more threat modelling and protection engineering than they’re used to. If we combine security-by-design in our engineering practices with the right level of runtime protections on AI-based systems, we can benefit tremendously from the benefits that these systems bring.

We are here for you