Exploiting Enterprise Backup Software For Privilege Escalation: Part Two

By Alex Oudenaarden - 09/07/2024

Intro

Welcome back to our blog series on the kernel driver vulnerability landscape, where we aim to eliminate as many kernel driver zero-days (leading to privilege escalation) as possible. We hope these blogs help you with finding kernel driver zero-days to prevent future exploitation as well.

This is the continuation of our exploration into two critical vulnerabilities we found in Macrium Reflect. In this instalment, we delve deeper into the practical aspects of exploitation, building upon the groundwork laid in part 1. If you haven't already, we recommend reading it first: that is where we dissected the vulnerabilities and established a foundation for this blog's journey into the depths of memory corruption in the kernel. In this blog, our focus is on understanding and utilizing multiple kernel heap exploitation primitives to build an effective exploit. By investing additional effort in finding a read primitive after finding a write primitive, we opened up additional avenues for exploitation.

We invite you to take the dive into the technical intricacies of the exploitation process with us and see how we develop a versatile and consistent proof of concept exploit for escalating our privileges through a kernel driver.

Exploitation Primitives

In the world of vulnerability exploitation, the journey often begins with translating found vulnerabilities into reusable primitives. These primitives serve as the building blocks for crafting exploits. Let's start by creating some wrappers for the vulnerabilities that will allow us to repeatedly interact with each vulnerability.

Read Primitive

The first one is the read primitive. This one is achieved through state corruption and involves two essential steps to abuse:

1. Buffer Allocation: This step involves allocating memory on the kernel heap from which we eventually perform an out-of-bounds read. It's crucial to note that we have full control over the size of the buffer. One major drawback, however, is that this particular allocation can happen only once per restart. The allocation function prototype for the read primitive is as follows:

2. Reading from Buffer: Once the buffer is allocated, we can perform read operations to extract data from kernel memory. We have to be cautious when reading to avoid accessing unallocated memory regions, as this can lead to a system crash. This function allows for repeated reads from the one kernel heap allocation, enabling us to read kernel heap memory following the allocation. The prototype for this operation is:

Write Primitive

The write primitive, a counterpart to the read primitive, enables us to modify the contents of the kernel heap, facilitating actual changes to system state. This primitive also consists of two main components:

1. Buffer Allocation: Similar to the read primitive, the write primitive begins with allocating memory space on the kernel heap. Unlike the read primitive, however, the write primitive allows for repeated allocations. This will prove to be crucial later. The allocation function prototype mirrors that of the read primitive:

2. Writing Beyond Buffer Bounds: Writing beyond the buffer's bounds is a more complex operation, involving the following steps:

- Create a file containing the bytes intended to overflow the buffer.

- Allocate the write buffer and specify the name of the file created in the previous step.

- Trigger the Windows mount manager to overflow the buffer with the contents of the file by calling ReadFile() on the device.

The Exploit in Theory

I would say the goal in vulnerability research is to find vulnerabilities that can lead to some sort of privilege escalation, ideally from user to kernel or from remote to local. However, it's common to halt at the discovery of vulnerabilities and theorise potential impacts without validating them through real-world exploitation. This exploit attempt aims to bridge this gap by challenging ourselves to verify the theorised impact through an actual exploitation exercise.

Another objective of this blog is to create a reusable method for exploitation when facing both read and write heap buffer overflows. While this proof of concept targets a token object, the methodology discussed here can be adapted to target various other kernel objects.

The Kernel Heap Allocator

Before we dive into the practical aspects, let's briefly review the Windows kernel heap manager. Since version 19H1, the Windows kernel has transitioned to using the segment heap for memory management, departing from the old kernel heap allocator. The segment heap allocation rules are more complex than the old memory-manager's, so it's advisable to familiarise yourself with them if attempting this process independently.

For now, it's important to understand that the allocation algorithm varies depending on the size of the allocation. Allocations smaller than 0x200 bytes end up in buckets in the low fragmentation heap, with somewhat randomized allocation locations, making effective heap spraying challenging. Conversely, allocations above 0x200 bytes and below 0xFE0 bytes are handled by the Variable Size allocator, operating akin to a traditional best-fit allocator with a conventional free list. You can read more on this here and here.

Given our additional requirement for two allocations due to the presence of two kernel heap overflow vulnerabilities, targeting the more straightforward variable size allocator is preferable. This should make it easier to allocate the two allocations for the primitives in close proximity. Therefore, we've selected the TOKEN object. A token object, being roughly 0x700 bytes in size, falls nicely between the 200h to FE0h byte boundaries of the variable size allocator. Additionally, the token object is a very high value target, allowing us to manipulate a process' privileges directly. With our target object selected, the next step is to theorise how we can manipulate the kernel heap into an exploitable state.
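The size rule described above can be written down as a small helper. This is only a sketch of the thresholds quoted in this blog, not of the real allocator: the function name `backend_for` is made up for illustration, and treating an allocation of exactly 0x200 bytes as belonging to the variable size allocator is an assumption, since the text does not say which side that boundary falls on.

```python
# Thresholds as quoted in the text; the real segment heap has more cases.
LFH_LIMIT = 0x200   # allocations below this go to the low fragmentation heap
VS_LIMIT = 0xFE0    # allocations below this (and not LFH) go to the variable size allocator

TOKEN_ALLOC_SIZE = 0x710  # token allocation including headers, per the text


def backend_for(size: int) -> str:
    """Return which allocator component would service `size` bytes (simplified)."""
    if size <= 0:
        raise ValueError("size must be positive")
    if size < LFH_LIMIT:
        return "low fragmentation heap"
    if size < VS_LIMIT:
        # Assumption: exactly 0x200 is treated as variable size here.
        return "variable size"
    return "other (large allocation path)"


print(hex(TOKEN_ALLOC_SIZE), "->", backend_for(TOKEN_ALLOC_SIZE))
```

Under these thresholds a token-sized allocation lands in the variable size range, which is the property the rest of the approach depends on.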

The Plan of Attack

Our goal is to use the write primitive to overwrite the Privileges field in the token object, thereby granting ourselves maximum privileges. To achieve this, we must overflow the write buffer into the token object, which requires these allocations to be in close proximity. When overflowing into the token object, it's crucial to maintain the integrity of the fields preceding the privileges field. Any corruption of these fields can destabilize the token state, potentially crashing the system. This is why we need the read primitive.

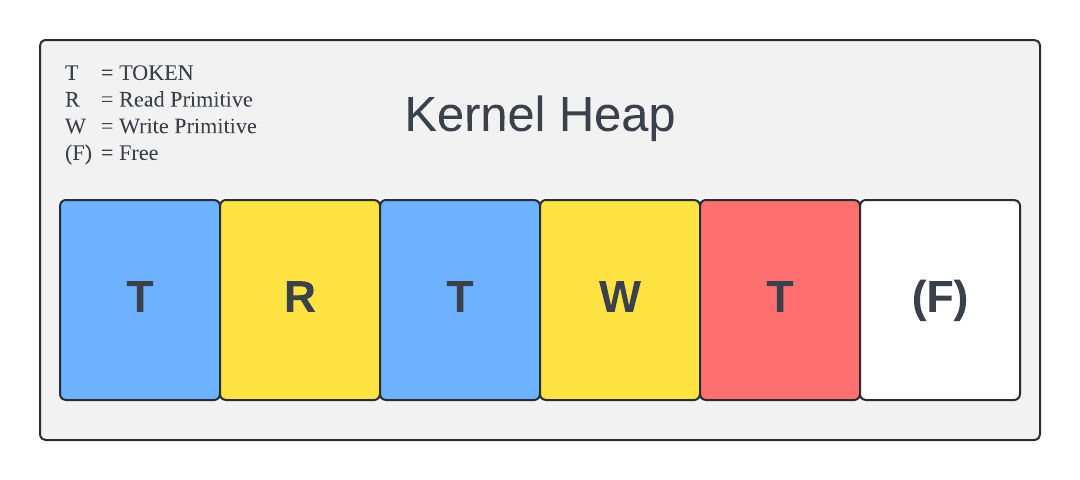

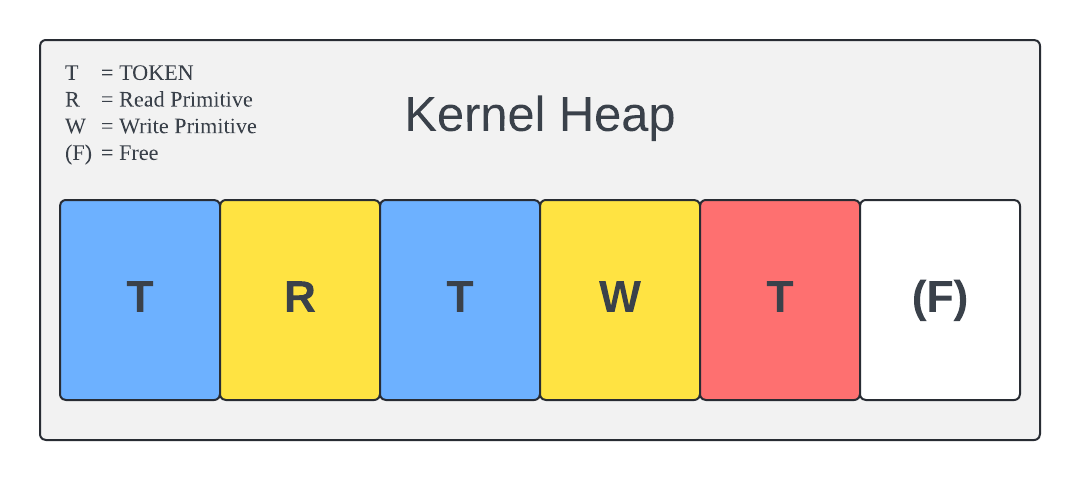

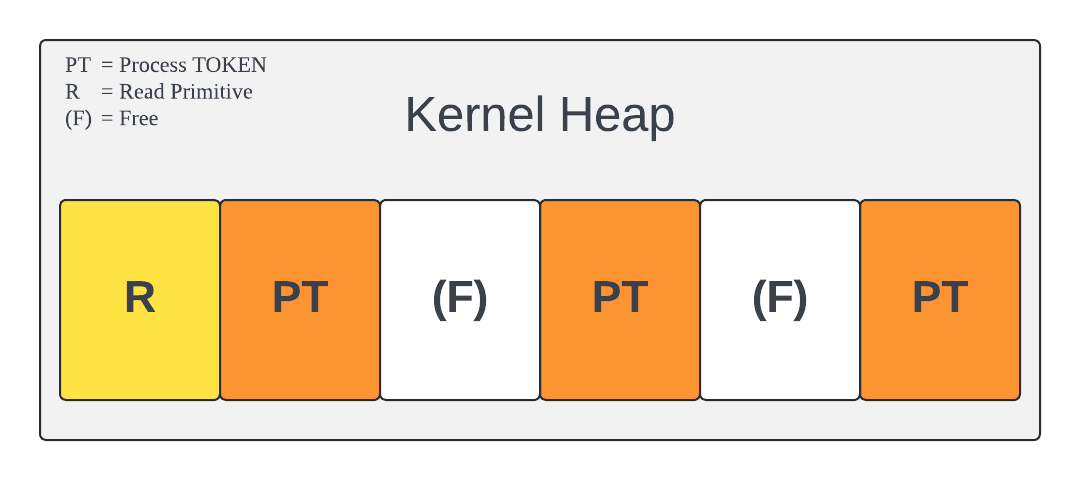

The read primitive enables us to verify the positions and values of the adjacent memory fields, ensuring that only the privileges field is altered while preserving the rest. Therefore, the read primitive allocation, too, must be in close proximity to both the write primitive allocation and the token allocation. The ideal setup would be something like this:

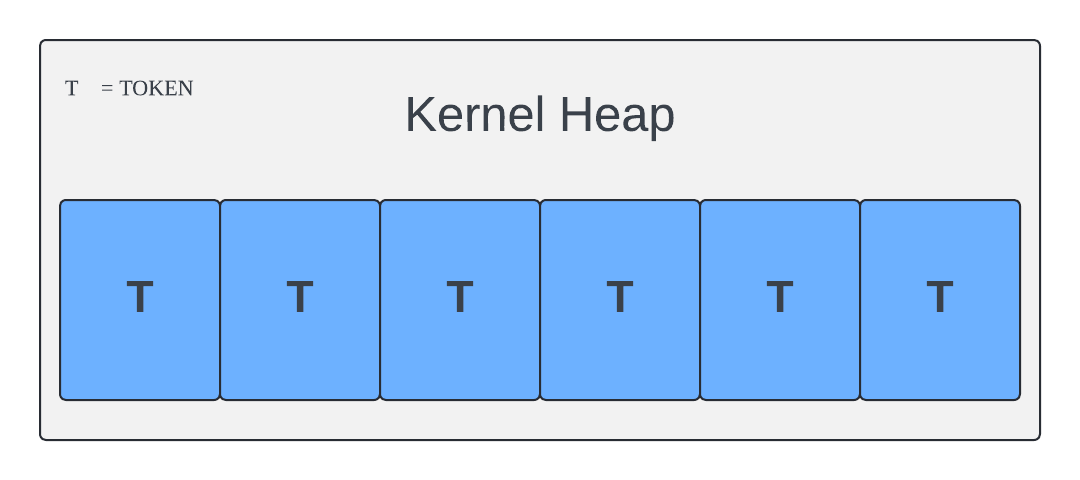

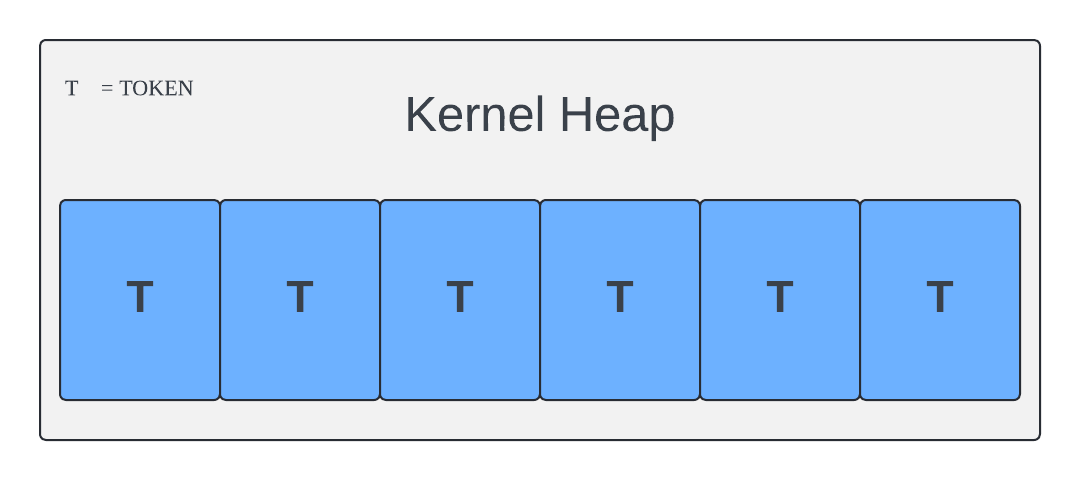

This layout can be created by performing several steps, starting with a heap spray using the token object. For this we first allocate many token objects to fill the kernel heap with tokens:

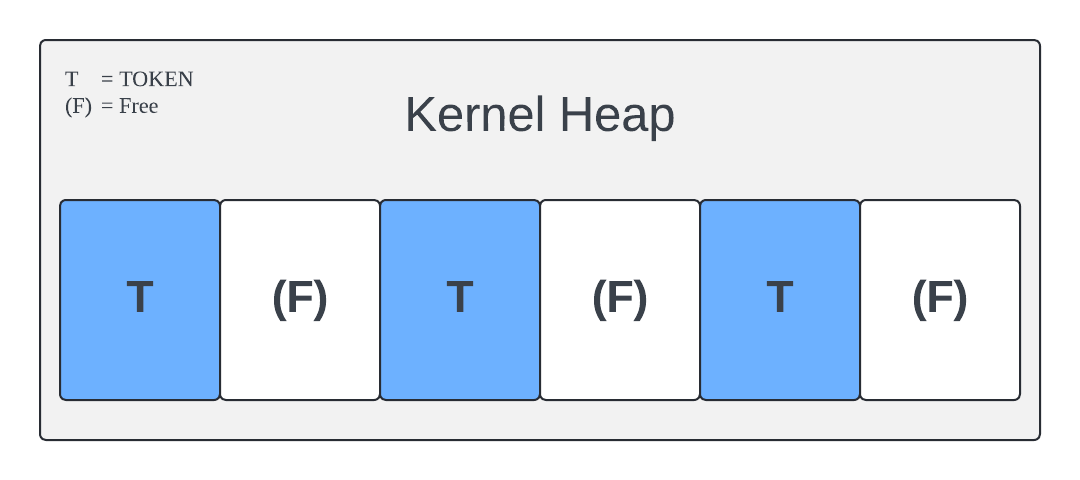

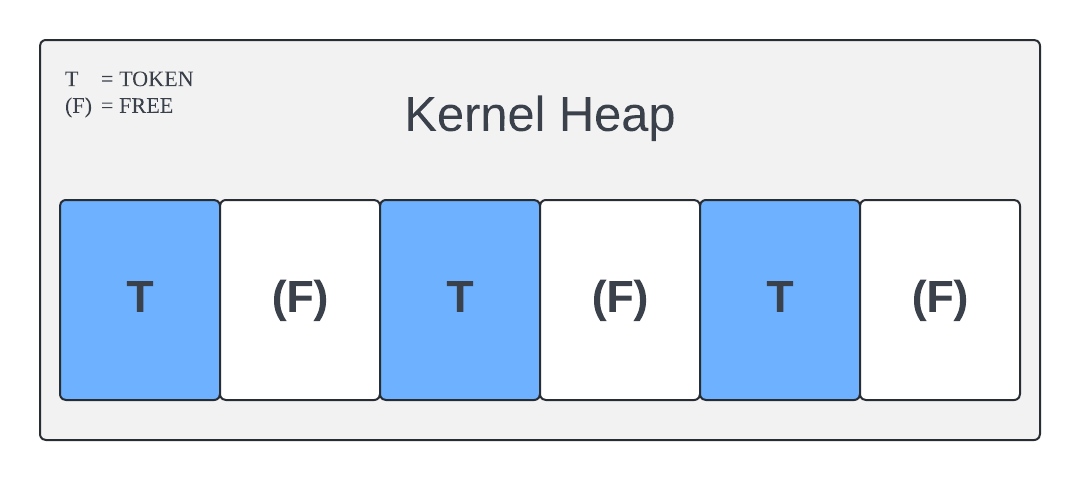

Then, by freeing every other token, we make holes between each allocation, giving us a layout like this:

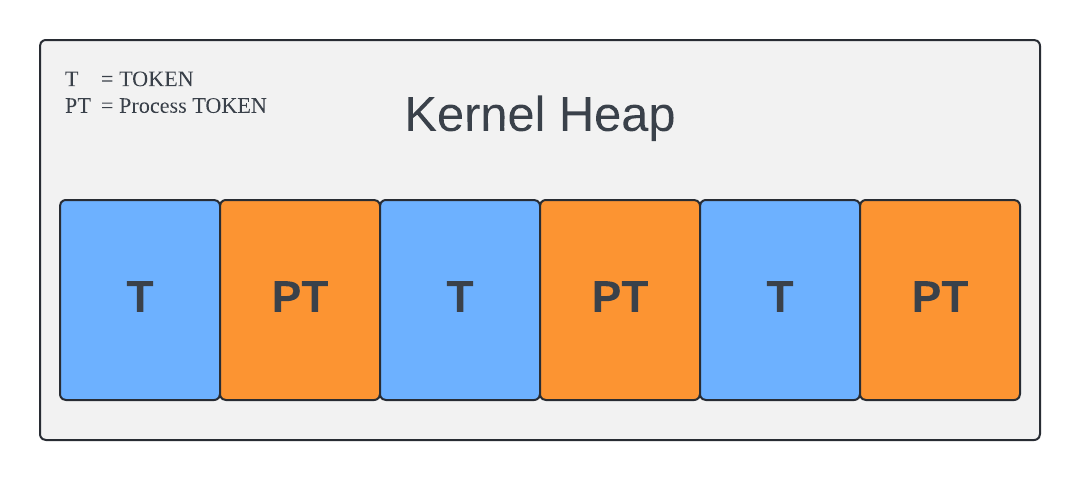

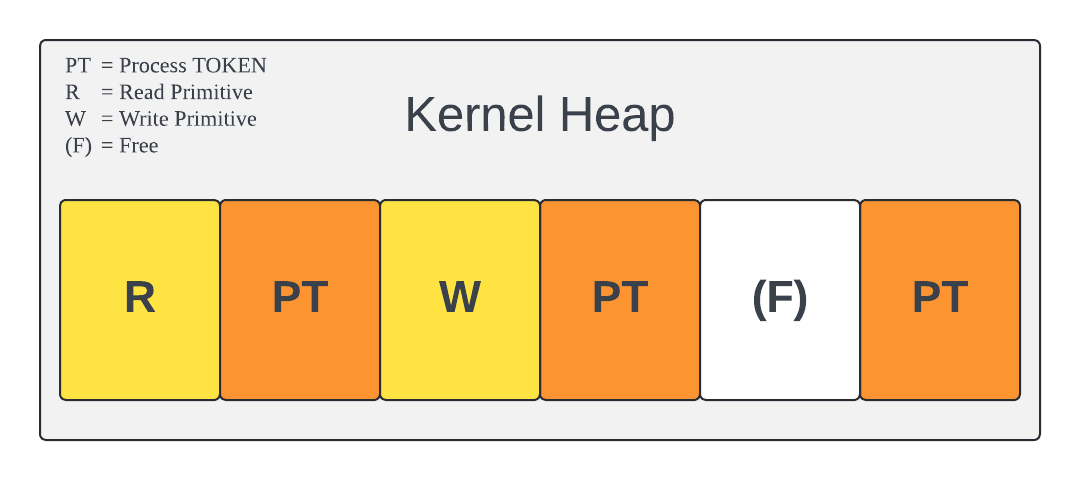

Next, we allocate our read and write primitive allocations into the holes left by our heap spray:

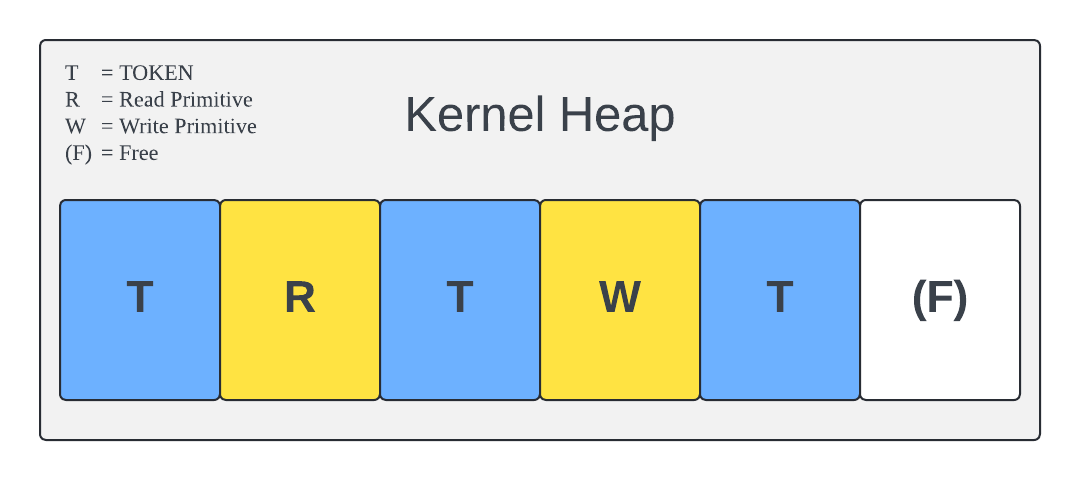

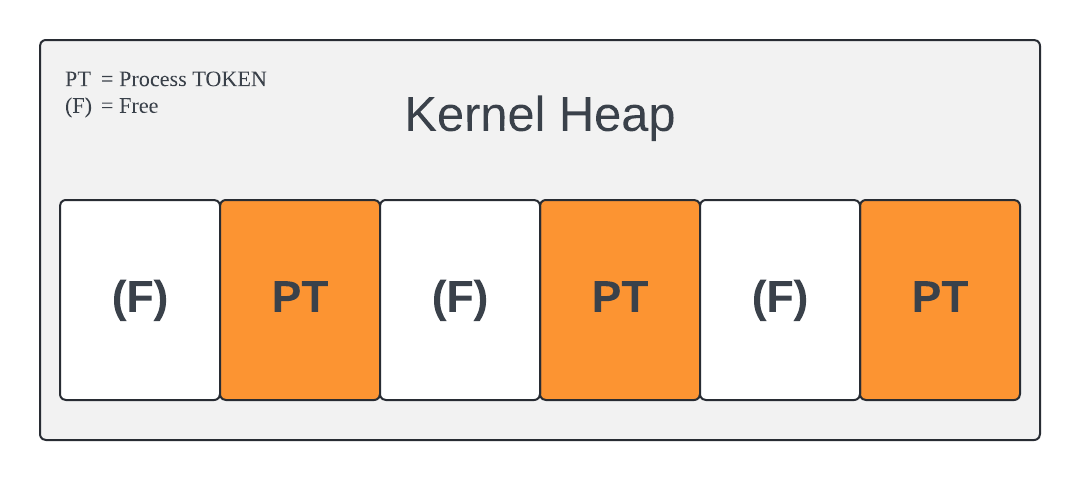

Then, at last, we can abuse the fact that we can read what comes after the write primitive's allocation for a precise overwrite of the (red-colored) token's Privileges member:

Of course, things are never this easy in practice. Let's take a look at the hoops we had to jump through and the lessons learned from attempting to put theory into practice.

Heap Spray

The entire exploit relies on our ability to perform a heap spray. Achieving a stable and consistent heap spray is vital; one misstep can trigger a blue screen. As mentioned earlier, we want to target the variable size allocator, so the object we attack must fall within the size range that this allocator services. Among the objects in this range, the token object is the most interesting candidate because being able to manipulate it leads to an immediate privilege escalation. With the target object selected, we now have to fill the heap with tokens to create a large surface area to abuse later.

Spraying Token Objects

Spraying token objects is straightforward. Simply open your process's token and duplicate it multiple times. This should allocate many token objects on the kernel heap:

Creating Holes in the Spray

Creating holes in the spray is just as easy by calling CloseHandle() on the handle you want to free. However, if we just start freeing every other handle from user-mode there's a big chance this won't actually free up every other token on the kernel heap. Achieving the predictable state described above requires a more methodical approach:

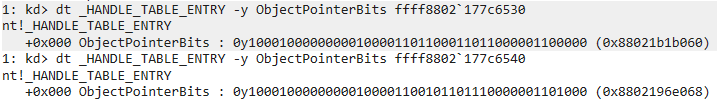

1. Initial Allocations: Initially, when allocating objects, they are placed in vastly different locations. If we allocate a few tokens and inspect the HANDLE_TABLE for two consecutive HANDLE_TABLE_ENTRYs, we observe the following:

The ObjectPointerBits approximately indicate where the object is allocated in memory (shown in the parentheses). As shown, these point to vastly different areas, not consecutive locations.

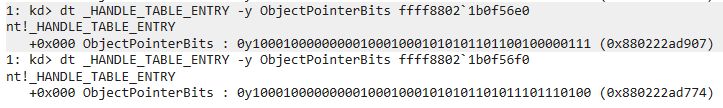

2. Repeated Allocations: Let's reinvestigate after doing 10,000 object allocations:

They're getting closer to each other, but we need a layout we can rely on 100% to be consecutive. If not, freeing every other handle is pointless, as the gaps might not be where we need them, risking allocation of our primitives in non-exploitable locations.

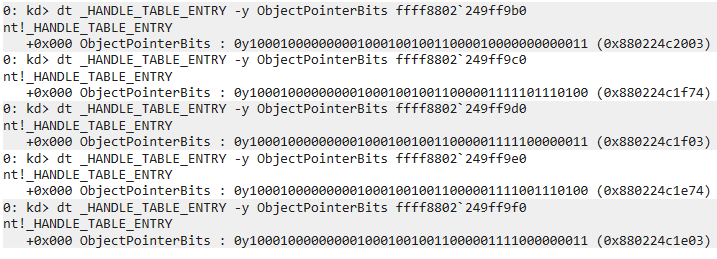

3. Achieving Predictability: Only after around 20,000 object allocations do we see consecutive addresses for the allocations (keeping in mind that a token is 710h bytes in size):

Each of these tokens is referenced by a handle in user mode in the same order. Now, when we free every other handle, we should achieve a uniform heap with predictable holes, just as planned.
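Whether a run of observed object addresses is "consecutive" in this sense can be checked mechanically. The sketch below assumes the layout explained later in this blog (two 710h-byte token allocations per 1000h-byte page), so neighbouring objects are alternately 710h and 8F0h bytes apart. The function name and the sample addresses are invented for illustration.

```python
PAGE_SIZE = 0x1000
TOKEN_ALLOC_SIZE = 0x710

IN_PAGE_STEP = TOKEN_ALLOC_SIZE                 # first slot -> second slot of the same page
CROSS_PAGE_STEP = PAGE_SIZE - TOKEN_ALLOC_SIZE  # second slot -> first slot of the next page


def is_consecutive(addresses) -> bool:
    """True if each address is the direct neighbour of the previous one."""
    deltas = [b - a for a, b in zip(addresses, addresses[1:])]
    # Every step must be one of the two legal neighbour distances...
    if any(d not in (IN_PAGE_STEP, CROSS_PAGE_STEP) for d in deltas):
        return False
    # ...and the two distances must alternate, since a page holds two slots.
    return all(d1 != d2 for d1, d2 in zip(deltas, deltas[1:]))


# Hypothetical addresses, not taken from a real system.
base = 0xFFFF800012340040
print(is_consecutive([base, base + 0x710, base + 0x1000, base + 0x1710]))
print(is_consecutive([base, base + 0x710, base + 0x2000]))
```

Comparing distances rather than absolute page offsets keeps the check independent of how far into the allocation the object pointer actually points.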

Another hurdle

During testing we found no way of using a duplicated token with manipulated privileges for privilege escalation. The CreateProcessWithTokenW API does allow creating a process using a duplicated token, like the one we sprayed on the heap. However, this function is unavailable to low-privileged users as it requires an admin-only privilege, the SE_IMPERSONATE_NAME privilege. We are also able to use the duplicated token to set the main thread's token using SetThreadToken, but there appears to be no way to abuse this either. Thus, we had to adjust our approach.

New Approach: Targeting Process Tokens

Instead of attacking a duplicated token, we can target process tokens: the process token of a process we control, to be exact. This way we're still attacking a token, as we set out to do, and we can reuse all the code we wrote so far. Only now we use proper primary tokens and not duplicated ones. These process tokens are created by the kernel every time a process is created and are the primary source for all access checks on Windows processes. There's just one problem: if we try to do the same heap spray as before, spamming 20,000 token allocations, we also have to create 20,000 processes. This is a bit excessive. To achieve the same predictable heap layout as before but with a process token to attack, and without the need to create many processes, we need to perform some additional steps:

1. Initial Spray: First, we spray 20,000 duplicated tokens to stabilize the heap's allocation state, like before.

2. Secondary Allocations: Next, we allocate around 200 more duplicated tokens. We will use these to create the holes. The duplicated tokens give us a state like this:

3. Creating Holes: Then, we free every second token to create holes, just like before:

4. Process Allocation: Next, we allocate processes for an executable whose privileges we want to elevate. These processes' tokens fill the holes we just created (shown in orange), giving us a reliable heap spray with process tokens:

5. Final Adjustments: Finally, we free the remaining duplicated token handles, leaving only holes between the process tokens:

This results in a reliable heap spray to attack the process tokens of a process we aim to elevate. Now, let's leverage these gaps to our advantage.
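The effect of steps 2 to 5 on the tail of the spray can be illustrated with a toy model. This is purely a simulation on a Python list and makes a strong simplifying assumption: every hole is refilled by exactly the allocation we intend, which the real allocator does not guarantee. The labels `"dup"` and `"proc"` and all function names are invented for this sketch.

```python
from typing import List, Optional

Slot = Optional[str]  # None models a free hole


def free_every_second(slots: List[Slot]) -> List[Slot]:
    """Step 3: free every second allocation, leaving holes in between."""
    return [s if i % 2 == 0 else None for i, s in enumerate(slots)]


def fill_holes(slots: List[Slot], label: str) -> List[Slot]:
    """Step 4: idealised refill where each hole receives one new allocation."""
    return [label if s is None else s for s in slots]


def free_label(slots: List[Slot], label: str) -> List[Slot]:
    """Step 5: free every allocation carrying `label`."""
    return [None if s == label else s for s in slots]


def build_layout(extra_tokens: int = 200) -> List[Slot]:
    slots: List[Slot] = ["dup"] * extra_tokens  # step 2: secondary allocations
    slots = free_every_second(slots)            # step 3
    slots = fill_holes(slots, "proc")           # step 4
    return free_label(slots, "dup")             # step 5


layout = build_layout()
print(layout[:6])
print("holes:", layout.count(None), "process tokens:", layout.count("proc"))
```

With 200 secondary allocations the model ends up with 100 process tokens separated by 100 holes, which matches the number of holes mentioned in the next section.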

Kernel Heap Reconnaissance

With a consistent heap spray of process tokens and holes exactly where we want them, we have made significant progress towards exploitation. However, the heap spray is only step 2 of the 13 steps in the final exploit. There's much more to consider and many constraints to work around to develop a reliable proof of concept. This all starts with using the read primitive to gather valuable information about the surrounding heap state.

Allocating the Read Primitive

First, we need to actually allocate the read primitive buffer. Allocating it as early as possible is key, as it lets the read primitive guide us throughout the setup phase. We use the read_prim_alloc function that we created at the start to allocate a buffer with a size equal to a token object (710h bytes). Thanks to our heap spray, we have 100 holes of this exact size waiting for us, and we can reasonably assume it will land in one of them. Looking at how this translates to our heap layout:

Allocating the Write Primitive

Later on, we aim to allocate the write primitive buffer into the hole immediately after the read primitive, like so:

But how do we know whether the write primitive buffer landed there? Can we just look forward two allocations (710h * 2 bytes)? Well, not exactly. There's a tiny detail about the way the allocator handles these types of allocations that we haven't mentioned yet. Unlike what our simplified images show, these allocations aren't completely consecutive. A token allocation, including its headers, totals 710h bytes. A heap page is 1000h bytes. To maintain flexibility, the allocator avoids spanning allocations across the end of one page and the start of another. This allows for only 2 token allocations per page, leaving 0x1000 - 0x710 - 0x710 = 0x1E0 bytes of unused space at the end of each page. An allocation therefore starts either at the start of a page or at offset 710h, so if we want to read 2 allocations forward, we always have to skip 1000h bytes. But this excess space between allocations introduces another problem.
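The page arithmetic above is easy to get wrong by hand, so here it is as a few lines of Python. The constants come straight from the text; the helper names `slot_offset` and `distance` are made up for this sketch, and slots are simply numbered 0, 1, 2, ... from the start of an arbitrary page.

```python
PAGE_SIZE = 0x1000
TOKEN_ALLOC_SIZE = 0x710

SLOTS_PER_PAGE = PAGE_SIZE // TOKEN_ALLOC_SIZE          # token-sized slots per page
SLACK = PAGE_SIZE - SLOTS_PER_PAGE * TOKEN_ALLOC_SIZE   # unused bytes at the end of a page


def slot_offset(slot_index: int) -> int:
    """Byte offset of a slot, counted from the start of the first page."""
    page, slot = divmod(slot_index, SLOTS_PER_PAGE)
    return page * PAGE_SIZE + slot * TOKEN_ALLOC_SIZE


def distance(from_slot: int, slots_ahead: int) -> int:
    """Bytes between the start of a slot and the slot `slots_ahead` after it."""
    return slot_offset(from_slot + slots_ahead) - slot_offset(from_slot)


print("slots per page:", SLOTS_PER_PAGE, "slack:", hex(SLACK))
for start in (0, 1):
    print("from slot", start,
          "next:", hex(distance(start, 1)),
          "two ahead:", hex(distance(start, 2)))
```

The distance to the direct neighbour depends on the starting position (710h or 8F0h), while the distance two allocations ahead is 1000h in both cases.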

Understanding Allocation Positions

Consider the scenario where we need to read all bytes after the write primitive allocation up to the Privileges member of the following token object. If the write buffer is allocated at the start of the page, the subsequent token object will be at offset 710h, immediately after the write primitive. This requires us to read the token header bytes and all the bytes of the token object up until the Privileges member. However, if the write primitive buffer is allocated at offset 710h, the following token object will be at the start of the next page, leaving a gap of 1E0h bytes between the end of the write primitive buffer and the start of the next token object's header, which requires us to read an additional 1E0h bytes.

Since we don't know in advance at which of these two positions within a page the write primitive buffer will land, we must account for this. This is why we must perform some preliminary reconnaissance of the heap state using the read primitive, allowing us to calculate in which of the two positions the write primitive will land.

Implementation

To calculate this, we use the read primitive to inspect the next 2-3 pages. With this we first verify that the read primitive is positioned directly before a token object. We do this by checking for the pool tag for token objects (Toke), which we expect to find in the header before a token object:

When we confirm the presence of a token object, we note its position relative to our read primitive buffer. If the Toke tag is found at offset 704h , as calculated from the start of the read primitive buffer, it indicates the read primitive is located at the start of a page. If it is found at offset 8E4h , it means the read primitive buffer is at the middle of the page. Knowing this, we can tell in which of the two possible positions the hole after the token is located. If the read primitive is at the start of the page, then the following token object is in the middle and the next free hole is, again, at the start of a page and vice versa.
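The two offsets follow from the page layout, and the position check itself is a simple comparison. The sketch below derives them and applies the check to synthetic byte buffers only. It assumes a 10h-byte pool header with the tag at offset 4, and that the readable buffer starts directly after its own header; both assumptions are consistent with the 704h and 8E4h values above but are not spelled out in the text. `classify_position` is a hypothetical name.

```python
from typing import Optional

TOKEN_ALLOC_SIZE = 0x710
PAGE_SLACK = 0x1E0
POOL_HEADER_SIZE = 0x10   # assumption: x64 pool header size
TAG_FIELD_OFFSET = 0x4    # assumption: tag position inside the pool header
POOL_TAG = b"Toke"

# Offset of the neighbour's tag, measured from the start of our buffer data.
TAG_AT_PAGE_START = TOKEN_ALLOC_SIZE - POOL_HEADER_SIZE + TAG_FIELD_OFFSET
TAG_AT_PAGE_MIDDLE = TAG_AT_PAGE_START + PAGE_SLACK


def classify_position(leak: bytes) -> Optional[str]:
    """Tell where the buffer sits in its page, based on where the tag shows up."""
    if leak[TAG_AT_PAGE_START:TAG_AT_PAGE_START + 4] == POOL_TAG:
        return "start"
    if leak[TAG_AT_PAGE_MIDDLE:TAG_AT_PAGE_MIDDLE + 4] == POOL_TAG:
        return "middle"
    return None  # no token directly after the buffer


# Synthetic data standing in for bytes returned by a read.
fake = bytearray(0x1000)
fake[TAG_AT_PAGE_MIDDLE:TAG_AT_PAGE_MIDDLE + 4] = POOL_TAG
print(hex(TAG_AT_PAGE_START), hex(TAG_AT_PAGE_MIDDLE), classify_position(bytes(fake)))
```

Returning `None` when neither offset matches makes the "no token after us" case explicit instead of guessing a position.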

With this information we are no longer necessarily bound to the layout described above. Ideally, we would place the write primitive in the hole after the first token, but we are also able to put it 2 or 3 holes further down. Because what if the hole after the first token happens to have been filled up in the time between the heap spray and now? To check this, we look ahead for the first hole we find within 3 pages. Finding holes just entails looping over the bytes that come after the read primitive, at the offsets we can expect a token to be:

Then, if a hole is found, we perform one more final check where we verify that there is a process token (not a duplicated one) after the hole. This will be the token belonging to one of the processes we spawned during the heap spray and will be the token we aim to attack later. We can check for this by looking at two members of the token object:

- TokenType

- ImpersonationLevel

If the TokenType is 1 and the ImpersonationLevel is 0, we are very likely to be looking at one of the primary process tokens that we created. The values for the duplicated tokens are 2 and 2, respectively. This check is performed by the following code:
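As a rough illustration of this check (not the code from the original exploit), the two values can be compared against the documented Windows enumerations. The numeric values are those of TOKEN_TYPE and SECURITY_IMPERSONATION_LEVEL; the Python class and function names are invented here, and extracting the two fields from leaked memory is deliberately left out, so the function just takes the already-parsed integers.

```python
from enum import IntEnum


class TokenType(IntEnum):
    PRIMARY = 1         # TokenPrimary
    IMPERSONATION = 2   # TokenImpersonation


class ImpersonationLevel(IntEnum):
    ANONYMOUS = 0       # SecurityAnonymous
    IDENTIFICATION = 1  # SecurityIdentification
    IMPERSONATION = 2   # SecurityImpersonation
    DELEGATION = 3      # SecurityDelegation


def looks_like_primary_token(token_type: int, impersonation_level: int) -> bool:
    """Heuristic from the text: primary tokens show type 1 and level 0."""
    return (token_type == TokenType.PRIMARY
            and impersonation_level == ImpersonationLevel.ANONYMOUS)


print(looks_like_primary_token(1, 0))  # values seen for a process token
print(looks_like_primary_token(2, 2))  # values seen for the duplicated tokens
```

This is a heuristic rather than proof, which is why the text says "very likely": any other primary token that happens to sit in that slot would pass the same test.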

Preparing the Payload

We put a lot of effort into setting up and calculating everything for just one reason: we must load the overflow payload before we make the write primitive allocation. This is because the driver takes full ownership of the file that is used for the attack when allocating the buffer, meaning we can't alter the file's contents afterwards. When preparing the overflow payload, we aim to overwrite the data on the kernel heap, up to the Privileges member, with the exact same data that is already there, limiting the risk of corrupting kernel data. Of course, we can only write one series of bytes to the file when performing the overflow, so we have to know in advance the exact offset at which the write buffer will land.

Using all the information from the previous step, where we find a hole that has a process token after it, we take note of its offset relative to the read primitive. Using this offset plus the information on whether there's an additional 1E0h bytes of space, we read that many bytes up until the Privileges member of the token. Then, we write these exact bytes to the file we will use for the attack.

Next, we append the actual payload to the file. The aim of the attack is to elevate the token's privileges to the maximum amount of privileges (the same as the system process) by overwriting the Privileges member. Looking at the _SEP_TOKEN_PRIVILEGES struct member of the token object, we see it has three fields spanning a total of 24 bytes:

To obtain system-like privileges we have to overwrite each of these fields with the maximum possible value: 0x1ff2ffffbc. With the entire payload written to the file and the heap layout prepared, it is time to pull the trigger.
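To make the "three fields spanning 24 bytes" concrete, the layout can be mirrored with `ctypes`. The field names below (Present, Enabled, EnabledByDefault) are the ones found in public Windows symbol information rather than in this blog, so treat them as an assumption; the Python class name is hypothetical and the snippet only inspects the layout.

```python
import ctypes


class SepTokenPrivileges(ctypes.Structure):
    """Layout sketch of _SEP_TOKEN_PRIVILEGES: three 64-bit privilege bitmasks."""
    _fields_ = [
        ("Present", ctypes.c_uint64),           # privileges the token holds
        ("Enabled", ctypes.c_uint64),           # privileges currently enabled
        ("EnabledByDefault", ctypes.c_uint64),  # privileges enabled by default
    ]


print("size:", ctypes.sizeof(SepTokenPrivileges))
for name, _ in SepTokenPrivileges._fields_:
    print(name, "at offset", getattr(SepTokenPrivileges, name).offset)
```

Each field is a bitmask in which one bit corresponds to one privilege, which is why a single 64-bit value per field is enough to describe the full set.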

Exploitation

With everything prepared, there is just one more step to complete the full exploit. The last significant step is allocating the write primitive buffer exactly into the hole that we prepared. This sounds easy, but because we can only have one write primitive buffer active at a time it still requires some effort. The chances of the write primitive buffer landing in the exact right place straight away are very low. To nudge it into the right direction we have to use a little bit of strategy, such as the one we came up with:

1. Allocate the write primitive.

2. Use the read primitive to check if the write primitive was allocated in the right hole.

3. If not, free the write primitive.

4. Allocate two new (duplicated) token objects to fill up the incorrect hole.

5. Repeat steps 1-4 approximately 10,000 times.

6. If the correct allocation is still not made, free all the newly allocated duplicate token objects and start again from step 1.

In our experience, allocating two new objects per failed write allocation gave the best chance of filling the incorrect hole. Because there's a small chance that we unintentionally fill the correct hole while doing so, we free all extra allocations in step 6 and try again. If we allocate only one extra token per missed write allocation, the write primitive buffer ends up being allocated in the same location every time due to the allocation algorithm and timing. The write allocation would then never get closer to where it has to go, whereas with the above strategy it eventually makes it.

If the previous steps executed correctly, it is finally time for the grand finale. The moment of truth: pulling the trigger on the attack. The last step involves calling ReadFile() on the device handle to complete the write primitive. Doing this triggers a callback in the Windows mount manager that calls the IRP_MJ_READ callback in the driver, which in turn overflows the kernel heap with our file's contents. If everything completes successfully, one of the processes we spawned during the heap spray should now have elevated privileges!

The exploit in action:

Conclusion

A blue screen triggered by our automation tooling for finding vulnerabilities in kernel drivers set us off on a lengthy journey. After investigating the blue screen, several more critical bugs emerged. We developed proof-of-concept exploits for each vulnerability and shipped these, along with a root cause analysis, to the software manufacturer. We emphasised that these vulnerabilities required a prompt fix, as they could lead to system memory corruption or even complete privilege escalation. The manufacturer quickly fixed the bugs, but we then questioned whether our assessment was entirely accurate. While having a read and write primitive in kernel mode memory can technically enable privilege escalation, we wondered if this was truly feasible given the constraints of this driver. This question led to the creation of this blog. To answer it: Yes, it is possible, but we only barely managed to overcome all the constraints. We might not be so lucky next time!
